Several articles at Smithsonian.com about rapid (image) capture reminded me of the connections to our IS&T Archiving Conference community. Briefly, Rapid Capture describes high-speed image capture and storage for large digitization projects. The Rapid Capture Team* was formed with members from several cultural institutions, including Ken Rahaim of the Smithsonian Institution, who has been a member of the conference Program Committee. Recent Rapid Capture Pilot Projects include:


- Thomas Sears Collection at the Archives of American Gardens (photographic plates)

- Bumblebees, from the National Museum of Natural History (45,000 bees)

- Historical Currency (printing) Proofs, from the National Numismatic Collection housed at the Smithsonian’s National Museum of American History (250,000 items)

- Freer Study Collection, Freer Sackler Museum

- National Air and Space Museum

Large-scale digital collection acquisition is made practical by automated methods for monitoring imaging performance and by custom-built hardware systems for object handling.

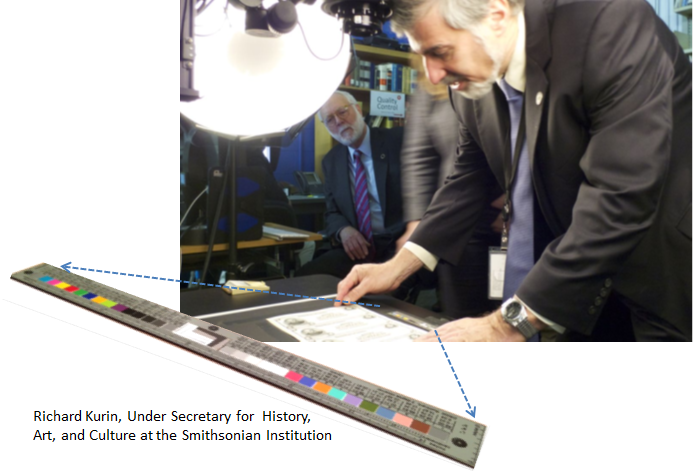

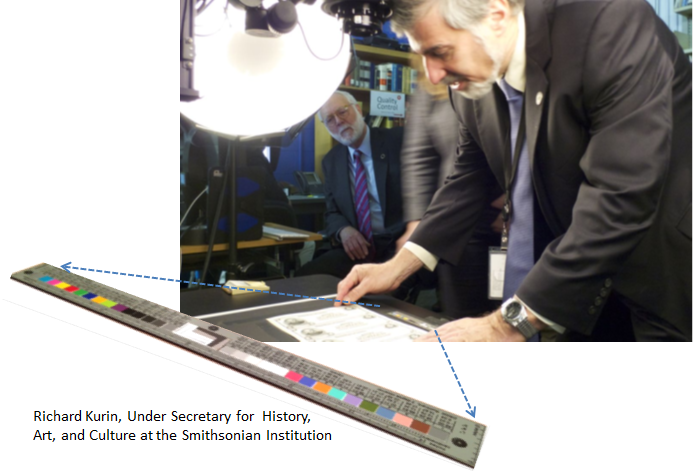

Test Targets: Here Richard Kurin, Smithsonian Institution, checks the imaging for the Historical Currency collection (photo by Günter Waibel). The Object-level Test Target, shown expanded, is used to monitor camera focus, lighting, color capture, etc. Such targets and their associated analysis software are used in quality assurance programs that are now part of national and international guidelines for digitization in repositories and museums, and they are frequently presented at Archiving Conferences. Speaking of targets, here is the same one in use in the Bumblebees project, where 6,000 bees were captured in the first two weeks (photo from the Smithsonian Digitization Facebook page). According to Tim Zaman, this may be the Most Digitized Test Target in the World. A bold statement, until you consider that it is designed to be captured alongside each object, and that it has been used in four of the five projects mentioned above, as well as in many others in the US and Europe over several years. (More on this in another blog posting …)
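The post does not describe how the target-analysis software works internally. As a minimal sketch of one kind of automated color check, assuming the target's color patches have already been located and measured in CIELAB, the code below compares each patch with reference values using the simple CIE 1976 color difference (ΔE*ab, the Euclidean distance in L*a*b*) and flags patches whose error exceeds a tolerance. The patch names, Lab values, and the 4.0 tolerance are hypothetical illustrations; published digitization guidelines define their own metrics and limits.

```python
import math

# Hypothetical reference CIELAB values (L*, a*, b*) for a few target patches.
# A real target ships with its own measured reference data.
REFERENCE_LAB = {
    "white": (95.0, 0.0, 2.0),
    "gray": (50.0, 0.0, 0.0),
    "red": (40.0, 55.0, 30.0),
}

# Hypothetical values measured from one capture of the target.
MEASURED_LAB = {
    "white": (94.0, 0.5, 2.5),
    "gray": (51.0, 0.0, -1.0),
    "red": (43.0, 52.0, 34.0),
}


def delta_e_76(lab1, lab2):
    """CIE 1976 color difference: Euclidean distance in CIELAB space."""
    return math.dist(lab1, lab2)


def check_patches(measured, reference, tolerance):
    """Return (patch, delta_e) for every patch whose error exceeds tolerance."""
    failures = []
    for name, ref_lab in reference.items():
        de = delta_e_76(measured[name], ref_lab)
        if de > tolerance:
            failures.append((name, round(de, 2)))
    return failures


if __name__ == "__main__":
    # With the hypothetical tolerance of 4.0, only the "red" patch is flagged.
    print(check_patches(MEASURED_LAB, REFERENCE_LAB, tolerance=4.0))
```

Because the target is captured alongside each object, a check like this can run on every frame, so a drift in lighting or color response shows up as soon as it happens rather than after thousands of captures.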


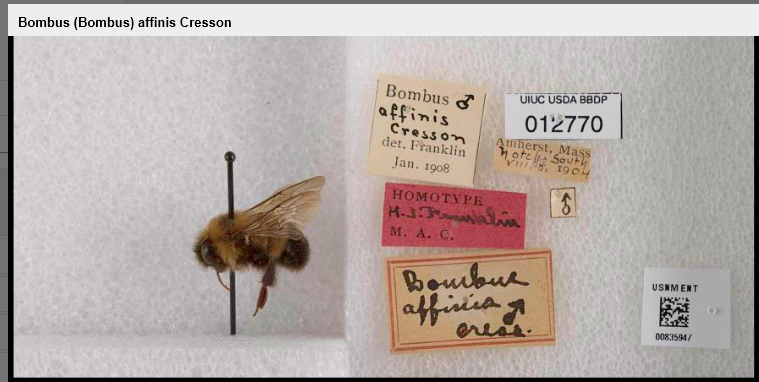

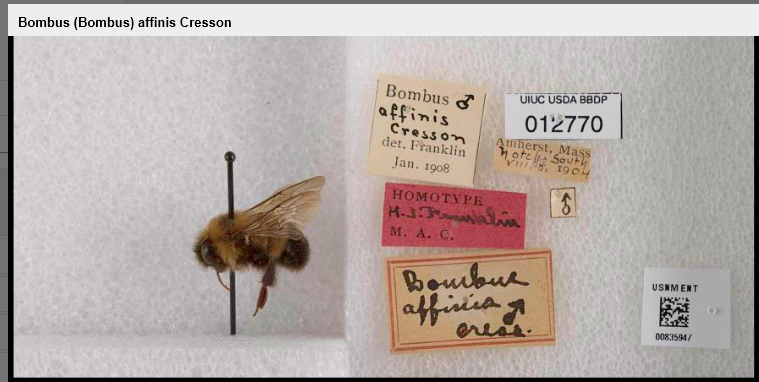

Hardware: For very large projects, fully automated conveyor-belt systems are employed. Here is a frame from a video showing the operation of a system delivered and set up by Picturae BV, with Olaf Slijkhuis shown in this belt's-eye view. Olaf also presents frequently at the Archiving Conference series, most recently in Berlin in 2014.

Metadata: At the Archiving Conference, imaging is just one part of the program, which also includes sessions on digital preservation, forensics and curation, and metadata verification. So here is a nod to metadata. Below is an example image from the Bumblebees collection. We see the various note cards that were captured along with the insect. Presumably they all mean, more or less, *Bombus affinis* Cresson ♂.

Et Alii: The IS&T Archiving Conference is in its eleventh year, and many participants have contributed to the development of the methods and tools that support rapid image capture and verification for the cultural heritage community. Although my list will be incomplete, for those interested I suggest submitting the following terms to your favorite search engine: FADGI, Metamorfoze, Image Engineering, Imatest, digital workflow, archiving conference … or ask via comments on this page.

For more information on this post:

1. The object-level test target was developed by Don Williams of Image Science Associates. (I make no commission on Don’s target sales, but I do have fun writing software to analyze performance and improve images based on test-target images.)

2. “Mission Not Impossible: Photographing 45,000 Bumblebees in 40 Days,” *Smithsonian Magazine*

3. “Museums Are Now Able to Digitize Thousands of Artifacts in Just Hours,” *Smithsonian Magazine*

4. Picturae BV

5. Presentations: Don and I will be presenting at IS&T’s Archiving Conference next month at the Getty Museum in Los Angeles. More information is available at Upcoming Events.

_________________

* I believe that Captain Capture is also a member of the team. He usually attends meetings remotely, but flies in occasionally.

– Peter Burns