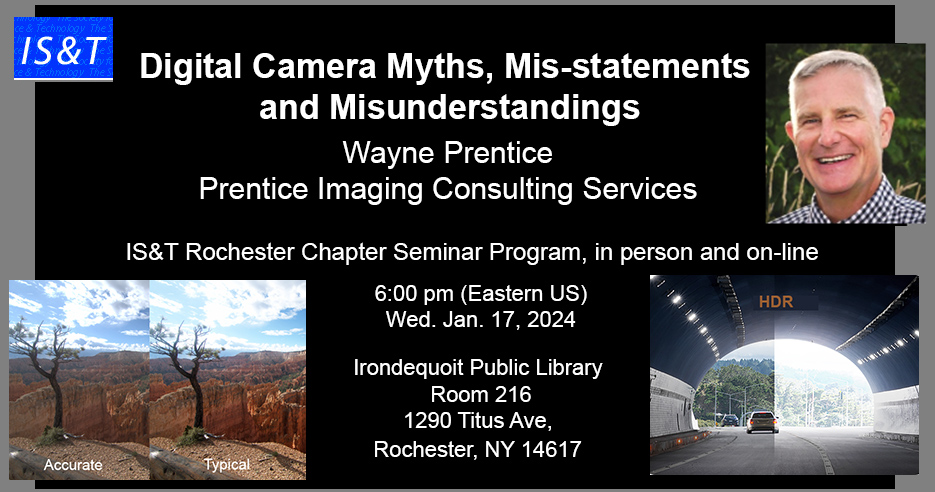

On Wednesday, 17 Jan. 2024, Wayne Prentice will present as part of the Society for Imaging Science and Technology (IS&T) Rochester, NY chapter seminar series. This meeting will be hybrid: in person and by Zoom video conference.

Place (in person): Irondequoit Public Library, Room 216, 1290 Titus Ave, Rochester, NY 14617

Time: 6:00 pm

Online (Zoom): https://lnkd.in/gg8vxVj2 (Meeting ID 859 2672 3473)

Digital cameras are deceptively complex. Understanding camera operation and design requires some knowledge of many disciplines, including radiometry, optics, sensors and sensor design, image processing, color science, perception, and image/video encoding. It is easy to miss something.

This talk was inspired by interactions with co-workers and clients. I have observed that missing subtle but important points can lead to suboptimal product and design decisions. The goal of this talk is to fill in some of those gaps.

Wayne has worked in the imaging industry for over 35 years, on systems ranging from X-ray, CAT scanners, and MRI to extraterrestrial imaging and digital cameras. Much of his digital camera experience comes from 17 years in Kodak R&D, where he became the lead image scientist and manager of the Digital Camera R&D group. For the past 5 years he has worked as an independent contractor, solving a wide range of imaging challenges, mostly in custom camera applications, computer vision, and HDR imaging.